This blog update is about Procedural Sound Design: what it is, how it works, its use cases and limitations, and why it is used in video games.

What is Procedural Sound Design in terms of video games? “Procedural Sound Design is about sound design as a system, an algorithm, or a procedure that re-arranges, combines or manipulates sound assets” (Stevens and Raybould, 2016). It is the idea of audio as a dynamic, interactive experience for the player, typically achieved through parameterisation within a system such as a game engine or Max/MSP. The main benefits are efficiency, detailed control, variation and flexibility in the sound design.

Whilst doing the initial research for this blog post, it became apparent that the term procedural sound design has no single, comprehensive definition. Misconceptions about procedural audio, procedural synthesis and procedural music are all common. Whether to define the exact differences between them, or not to worry about them as long as the resulting audio works as intended, is arguably a matter of opinion. “Andy Farnell, who coined or at least popularised the term ‘Procedural Audio’ sees it as any kind of system where the sound produced is the result of a process…” “... So under that definition, as soon as you set up any kind of system of playback you could see it as being procedural audio.” (Andersen, 2016)

Why use a procedural approach to sound?

These sound systems are essentially lists of instructions that play back audio files or generate sound through synthesis in specific ways. This makes them adaptable frameworks that can be repurposed for mechanically similar systems.

See Fig. 1 for an example of a car system created by Andy Farnell.

Fig. 1: Andy Farnell's car system, at 9.30 in the video below.

https://www.youtube.com/watch?v=-Ucv7EXwnCM&list=PLLHtPBwbWUW5EG-4ajfz5BQBOIm31ClhC&index=5

Farnell goes on to explain that this system can be reused for another type of car by building on the existing framework.
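To make the idea of a reusable framework concrete, here is a minimal Python sketch. It is not Farnell's model from the video; it assumes a very simple engine sound built from a few harmonics of the engine's firing frequency. For a four-stroke engine each cylinder fires once every two crankshaft revolutions, so the firing frequency is rpm / 60 × cylinders / 2. The `EngineProfile` class, its fields and the harmonic weights are hypothetical; the point is that one procedure produces a different car when handed a different set of parameters.

```python
import math
from dataclasses import dataclass

SAMPLE_RATE = 48_000  # assumed output sample rate in Hz


@dataclass(frozen=True)
class EngineProfile:
    """Hypothetical parameter set describing one type of car engine."""
    cylinders: int
    harmonic_weights: tuple[float, ...]  # relative level of each harmonic


def firing_frequency(rpm: float, cylinders: int) -> float:
    """Four-stroke engine: each cylinder fires once every two revolutions."""
    return rpm / 60.0 * cylinders / 2.0


def render_engine(profile: EngineProfile, rpm: float, seconds: float) -> list[float]:
    """Additive-synthesis sketch: sum weighted harmonics of the firing frequency."""
    f0 = firing_frequency(rpm, profile.cylinders)
    total_weight = sum(profile.harmonic_weights)
    samples = []
    for i in range(round(seconds * SAMPLE_RATE)):
        t = i / SAMPLE_RATE
        value = sum(
            w * math.sin(2 * math.pi * f0 * (k + 1) * t)
            for k, w in enumerate(profile.harmonic_weights)
        )
        # Dividing by the sum of (positive) weights keeps output within [-1, 1].
        samples.append(value / total_weight)
    return samples


# The same procedure, reused for two different (hypothetical) cars.
HATCHBACK = EngineProfile(cylinders=4, harmonic_weights=(1.0, 0.5, 0.25))
MUSCLE_CAR = EngineProfile(cylinders=8, harmonic_weights=(1.0, 0.8, 0.6, 0.4))

if __name__ == "__main__":
    print(firing_frequency(3000, HATCHBACK.cylinders))   # 100.0
    print(firing_frequency(3000, MUSCLE_CAR.cylinders))  # 200.0
    print(len(render_engine(HATCHBACK, 3000, 0.01)))     # 480
```

A convincing engine would need far more than this (noise, resonances, load behaviour), but the structure of reusing one procedure with a new parameter set stays the same.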

In the same lecture series, Farnell argues: “…What we’re doing now is moving from a state based interaction model to a continuous interaction model…” “…In the tests that I’ve done with players, it’s the interactivity of the object, the way it responds to your input which defines realism, realism is not a sonic quality, realism is a behavioral quality.” (Cheshire, 2013, part 4 at 11.05)
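The difference between a state-based and a continuous interaction model can be illustrated with a small sketch. This is a hypothetical example, not taken from the lecture: the state-based function snaps a car's RPM to one of a few pre-recorded loops, while the continuous function maps the same RPM smoothly onto an equal-power crossfade and pitch ratios for two looping layers. The thresholds, layer recording speeds and names are all assumptions.

```python
import math

# Assumed recording speeds of two looping engine layers.
LOW_LAYER_RPM = 2000.0
HIGH_LAYER_RPM = 4000.0


def state_based(rpm: float) -> str:
    """Snap RPM to one of a few fixed states; the sound only changes at thresholds."""
    if rpm < 1500:
        return "idle_loop"
    if rpm < 3000:
        return "mid_loop"
    return "high_loop"


def continuous(rpm: float) -> dict[str, float]:
    """Map RPM smoothly onto gains and pitch ratios for two layers."""
    # Position between the two layer speeds, clamped to [0, 1].
    x = (rpm - LOW_LAYER_RPM) / (HIGH_LAYER_RPM - LOW_LAYER_RPM)
    x = min(max(x, 0.0), 1.0)
    return {
        # Equal-power crossfade: low_gain**2 + high_gain**2 == 1.
        "low_gain": math.cos(x * math.pi / 2),
        "high_gain": math.sin(x * math.pi / 2),
        # Each layer is re-pitched relative to the RPM it was recorded at.
        "low_pitch": rpm / LOW_LAYER_RPM,
        "high_pitch": rpm / HIGH_LAYER_RPM,
    }


if __name__ == "__main__":
    # Across the 3000 RPM threshold the state-based model jumps to a new loop,
    # while the continuous model's parameters change only slightly.
    for rpm in (2990, 3010):
        print(rpm, state_based(rpm), continuous(rpm))
```

In the continuous version every small change in the player's input produces a small, audible change in the sound, which is the behavioural quality the quote describes.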

Another consideration is flexibility: future-proofing sounds against changes to the in-game assets they relate to, such as a character animation that another member of the development team may later alter. It is easier to tweak a parameter in the system to adjust a sound's timing slightly than to go back to a DAW and rework the asset. “Procedural Audio is a philosophy about sound being a process and not data. In its broadest sense, if I were to say it is the philosophy of sound design in a dynamic space, and the method is as irrelevant to Procedural Audio as whether you use oils or water colours is to painting.” (Farnell, 2012) In other words, Farnell suggests that whichever method gets the sound designer the desired result is the right one, and that procedural audio is simply another tool alongside traditional sound design methods.
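A minimal sketch of that timing tweak, assuming a hypothetical `SoundTrigger` record (not an engine API) that fires a sound on a given animation frame plus an adjustable offset. When the animation is re-timed, only the `offset_ms` parameter changes and the audio asset itself stays untouched.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SoundTrigger:
    """Hypothetical trigger that ties a sound event to an animation frame."""
    sound: str
    frame: int              # animation frame on which the sound fires
    offset_ms: float = 0.0  # fine timing adjustment, exposed as a parameter


def trigger_time_ms(trigger: SoundTrigger, fps: float) -> float:
    """Milliseconds from the start of the animation until the sound plays."""
    return trigger.frame / fps * 1000.0 + trigger.offset_ms


if __name__ == "__main__":
    step = SoundTrigger(sound="footstep_heel", frame=12)
    # The animation changes slightly: nudge the parameter instead of
    # re-editing and re-exporting the audio file in a DAW.
    adjusted = replace(step, offset_ms=-20.0)
    print(trigger_time_ms(step, fps=30), trigger_time_ms(adjusted, fps=30))
```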

Limitations have always been a factor when creating sound for video games. In the early days of computer games, the limitation was the sound chip of the target platform. At the start of the CD era, with the PlayStation™, the challenge was loading sounds from the disc quickly enough. Today the main limitations are platform-specific hardware restrictions: how much RAM and processing power the audio system is allowed to use.

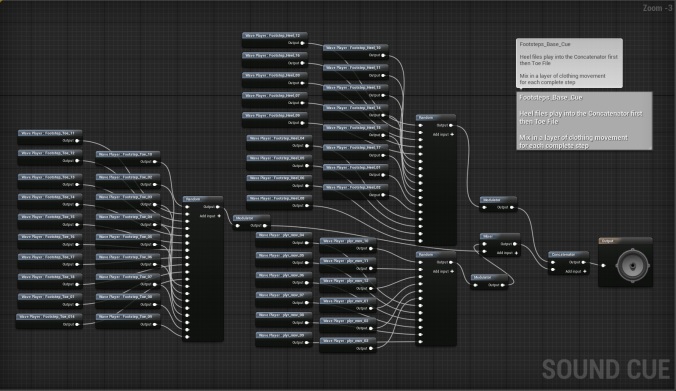

Above is an example of a footstep Sound Cue for Unreal Engine 4.

Inherently, storing many variations of a sound adds to the audio department's memory budget, and this is where many of the benefits of a procedural system can be found. In Unreal Engine 4, Sound Cues give control over how short one-shot assets are combined and played back, which can, for example, reduce the need for longer looping sections of audio. In this footstep example, the heel and toe sections of the footsteps have been separated and are recombined at random by the system. Combined with modulators for pitch and volume, this technique produces far more variation than the same number of conventionally recorded, fixed footstep files could.
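The heel/toe technique can also be sketched outside the engine. The Python below is not Unreal Engine code and uses no Unreal API; it only mimics what the Sound Cue does: pick a random heel and a random toe sample, then apply small random pitch and volume offsets. The asset names and the ±5% ranges are assumptions. It also shows the memory argument as arithmetic: with 4 heel and 4 toe files, 8 stored assets yield 16 distinct heel/toe pairings before any pitch or volume modulation is applied.

```python
import random
from dataclasses import dataclass

# Hypothetical asset names; in a real project these would be short one-shot files.
HEELS = ["heel_01", "heel_02", "heel_03", "heel_04"]
TOES = ["toe_01", "toe_02", "toe_03", "toe_04"]

PITCH_RANGE = (0.95, 1.05)   # assumed random pitch multiplier range
VOLUME_RANGE = (0.95, 1.05)  # assumed random volume multiplier range


@dataclass(frozen=True)
class Footstep:
    heel: str
    toe: str
    pitch: float
    volume: float


def next_footstep(rng: random.Random) -> Footstep:
    """Recombine a random heel and toe, then modulate pitch and volume."""
    return Footstep(
        heel=rng.choice(HEELS),
        toe=rng.choice(TOES),
        pitch=rng.uniform(*PITCH_RANGE),
        volume=rng.uniform(*VOLUME_RANGE),
    )


def stored_assets() -> int:
    """Files that actually occupy memory."""
    return len(HEELS) + len(TOES)


def sample_pairings() -> int:
    """Distinct heel/toe combinations, before pitch/volume modulation."""
    return len(HEELS) * len(TOES)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the sequence is repeatable
    for _ in range(3):
        print(next_footstep(rng))
    print(stored_assets(), "files ->", sample_pairings(), "heel/toe pairings")
```

Because the pitch and volume values are continuous, no two footsteps need sound identical, while memory grows only with the sum of heel and toe files rather than with the number of combinations.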

“In general, the fact that with procedural audio not all voices are created equal – and that some patches will use more CPU cycles than others – is usually not a very good selling point against the predictability of a fixed voice architecture.” (Fournel, 2012)
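Fournel's point about predictability can be shown with simple arithmetic. In the sketch below all costs are hypothetical units, not measured figures: with identical sample-playback voices the worst-case load is just the voice count times a fixed cost, whereas the load from procedural patches depends on which patches happen to be playing, so the audio system may need a budget check before starting another one.

```python
# Hypothetical cost of one sample-playback voice, in arbitrary CPU units.
SAMPLE_VOICE_COST = 1.0


def fixed_architecture_worst_case(max_voices: int) -> float:
    """With identical voices, the worst case is known before the game runs."""
    return max_voices * SAMPLE_VOICE_COST


def procedural_load(active_patch_costs: list[float]) -> float:
    """Procedural patches differ in cost, so load depends on what is playing."""
    return sum(active_patch_costs)


def can_start(active_patch_costs: list[float], new_patch_cost: float, budget: float) -> bool:
    """Simple admission check before starting another procedural voice."""
    return procedural_load(active_patch_costs) + new_patch_cost <= budget
```

For example, with a budget of 32 units, `fixed_architecture_worst_case(32)` always fits, but with patches costing `[0.5, 4.0, 12.0]` already running, `can_start(..., 12.0, 32.0)` succeeds while a 16-unit patch would be refused.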

Bibliography

Stevens, R. and Raybould, D. (2016) Game Audio Implementation. 1st edn. Boca Raton, FL: CRC Press/Taylor and Francis.

Nair, V. (2012) Procedural Audio: Interview with Andy Farnell. designingsound.org. Available at: http://designingsound.org/2012/01/procedural-audio-interview-with-andy-farnell/ (Accessed: 13 February 2017).

Nair, V. (2012) Procedural Audio: An Interview with Nicolas Fournel. designingsound.org. Available at: http://designingsound.org/2012/06/procedural-audio-an-interview-with-nicolas-fournel/ (Accessed: 13 February 2017).

Andersen, A. (2016) Why Procedural Game Sound Design Is So Useful – Demonstrated in the Unreal Engine. ASoundEffect.com. Available at: https://www.asoundeffect.com/procedural-game-sound-design/ (Accessed: 13 February 2017).

Cheshire, M. (2013) Andy Farnell designing sound procedural / computational audio lecture part 1-5 [Online video]. 7 March 2013. Available at: https://www.youtube.com/playlist?list=PLLHtPBwbWUW5EG-4ajfz5BQBOIm31ClhC (part 1), https://www.youtube.com/watch?v=kwK7OSkg4Gs&list=PLLHtPBwbWUW5EG-4ajfz5BQBOIm31ClhC&index=4 (part 4), https://www.youtube.com/watch?v=-Ucv7EXwnCM&list=PLLHtPBwbWUW5EG-4ajfz5BQBOIm31ClhC&index=5 (part 5, at 9.30) (Accessed: 13 February 2017).